Vave

Vave, a three-week project done in collaboration with Suyash Sinha, explores mixed-reality interaction techniques through the creation of a virtual, gesture-based instrument. The project’s motivation was to learn to work with augmented, virtual, and mixed reality while creating an enjoyable musical experience.

To create an enjoyable musical experience while exploring interactions in augmented/virtual reality.

The final product was a handheld instrument played by waving and making other hand gestures over it. The physical form gave the player a tangible mode of interaction, and the instrument was interfaced with Unity to drive visualizations that provide visual feedback in VR.

The Instrument

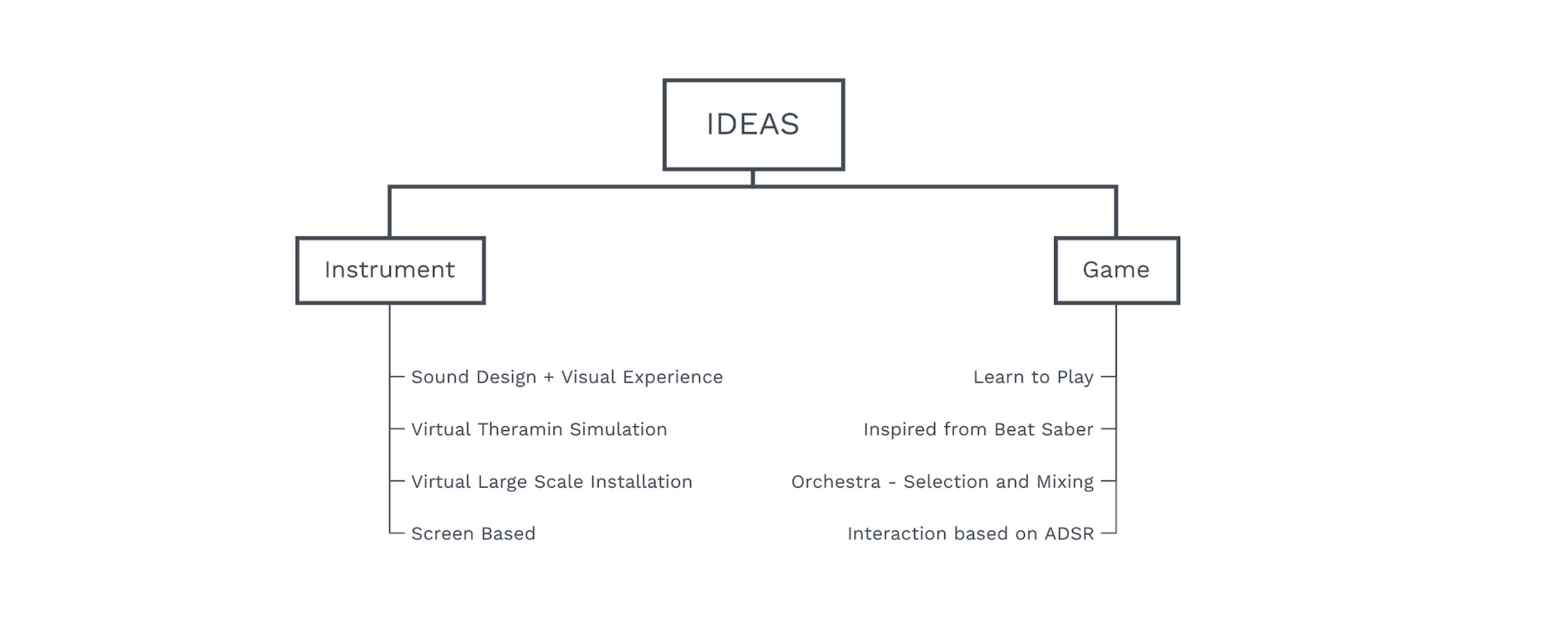

To create an enjoyable music experience, we started by learning about recent technology-driven musical instruments, games, and experiences. The first challenge we faced was deciding whether to create an instrument or a game. We looked at Beat Saber (an existing musical game in virtual reality) and initially came up with ideas in both directions.

After exploring the idea of an instrument a little further, we decided to create an instrument.

Initial Ideas

To create an enjoyable experience, we dug deeper into existing technology-driven musical instruments and into bodily, physical ways of making music. Our main inspirations among electronic and digital instruments were Coretet and the theremin.

Coretet is a set of dynamic, gesture-driven musical instruments for virtual reality performance. It includes virtual string and percussion instruments, such as a violin, a cello, and an orb, that are played using a virtual reality headset and controllers.

The theremin is an electronic musical instrument controlled without physical contact by the thereminist (performer). The instrument's controlling section usually consists of two metal antennas that sense the relative position of the thereminist's hands and control oscillators for frequency with one hand and amplitude (volume) with the other. The electric signals from the theremin are amplified and sent to a loudspeaker.

Yago De Quay's video on an augmented reality musical instrument presents a very interesting concept: controlling the parameters of a song through hand movements captured with motion capture technology.

We also looked at bodily experiences such as piano-tap-dance, where players play a giant floor piano by dancing on its keys.

Finally, we came up with the idea of a musical instrument driven solely by gestures and emotions. A video of Mr. Bean, in which he uses his expressions to conduct a choir, captures this idea well. Wouldn’t it be awesome to play an instrument like that someday?

Inspired by these wild experiments from around the internet, we turned to the technical side of the process and, in parallel, explored different media and ways of building the instrument. We had a lot of fun learning about and quickly prototyping different interaction techniques in AR/VR/MR. Because we did not have access to off-the-shelf VR controllers, we had to look for alternatives.

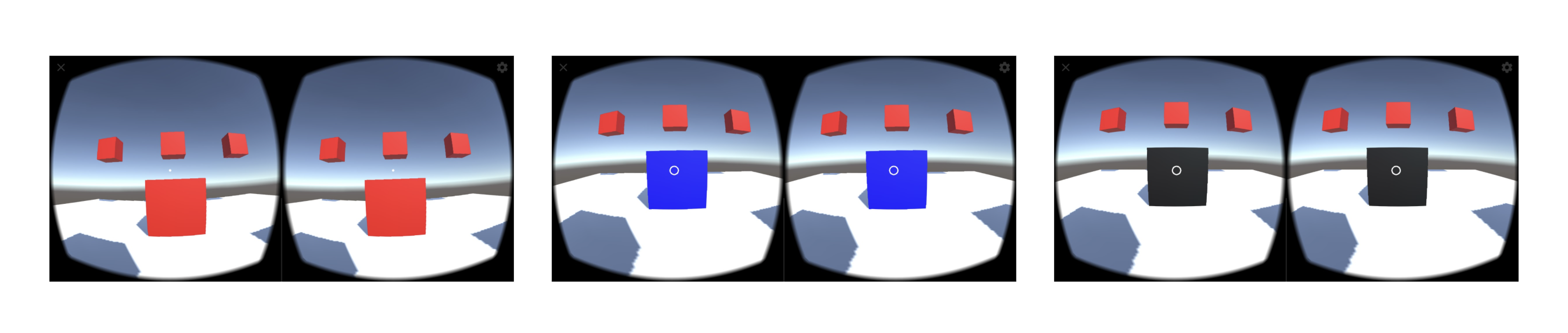

VR Gaze with Raycast Reticle: a simple built-in interaction, but constantly moving around to point the phone at elements in space could be tiring.
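A gaze reticle works by casting a ray from the camera along the direction the phone faces and picking the nearest element the ray hits. Below is a minimal Python sketch of that test, assuming each interactive element is approximated by a sphere. The `Target` type and the `gaze_target` function are hypothetical; in Unity, the engine's own `Physics.Raycast` would do this work against the scene's colliders.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Target:
    """Hypothetical interactive element, approximated by a sphere."""
    name: str
    center: Vec3
    radius: float


def ray_sphere_distance(origin: Vec3, direction: Vec3, target: Target) -> Optional[float]:
    """Distance along a unit-length ray to the sphere surface, or None on a miss."""
    oc = _sub(origin, target.center)
    b = _dot(oc, direction)
    c = _dot(oc, oc) - target.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None  # the ray's line never touches the sphere
    root = math.sqrt(disc)
    t = -b - root  # nearer intersection
    if t < 0:
        t = -b + root  # origin is inside the sphere: use the far intersection
    return t if t >= 0 else None  # sphere lies entirely behind the camera


def gaze_target(origin: Vec3, forward: Vec3, targets: Sequence[Target]) -> Optional[str]:
    """Name of the nearest target under the reticle, or None if nothing is hit."""
    length = math.sqrt(_dot(forward, forward))
    direction = (forward[0] / length, forward[1] / length, forward[2] / length)
    best_name, best_t = None, math.inf
    for target in targets:
        t = ray_sphere_distance(origin, direction, target)
        if t is not None and t < best_t:
            best_name, best_t = target.name, t
    return best_name


targets = [Target("far", (0, 0, 10), 1), Target("near", (0, 0, 5), 1)]
print(gaze_target((0, 0, 0), (0, 0, 1), targets))  # near
```

Picking the nearest hit matters when elements overlap along the line of sight, since only the front one should react to the gaze.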

In our next prototype, keys popped up in the AR space, and tapping them on the screen played a song. The lesson from this exercise: although it was in AR, the experience felt like any other 2D application and was not enjoyable.

We also prototyped wearable AR by building a stereoscopic AR camera in Unity. This gave us tracker-based AR while keeping the hands free, unlike handheld AR on a mobile device, so we could place virtual buttons in the 3D world space and press them with our hands. The lesson from this exercise: interactions became more immersive, but the virtual buttons only worked while they were visible to the camera.
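A stereoscopic camera of this kind renders the scene twice, from two viewpoints separated by the interpupillary distance (IPD), and shows each image on one half of the phone screen. The minimal Python sketch below computes the two eye positions and their viewport rectangles. The 64 mm default IPD and the `stereo_rig` name are assumptions for illustration; in Unity, the same idea maps onto two cameras whose `Camera.rect` viewports each cover half the screen, though our actual rig may have been set up differently.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]
Rect = Tuple[float, float, float, float]  # normalized (x, y, width, height) viewport


def stereo_rig(head: Vec3, right: Vec3, ipd_m: float = 0.064) -> Dict[str, Tuple[Vec3, Rect]]:
    """Offset each eye by half the (assumed) IPD along the head's right vector
    and pair it with the half of the screen it renders into."""
    norm = math.sqrt(sum(c * c for c in right))
    unit = tuple(c / norm for c in right)
    half = ipd_m / 2
    left_eye = tuple(h - u * half for h, u in zip(head, unit))
    right_eye = tuple(h + u * half for h, u in zip(head, unit))
    return {
        "left": (left_eye, (0.0, 0.0, 0.5, 1.0)),    # left half of the screen
        "right": (right_eye, (0.5, 0.0, 0.5, 1.0)),  # right half of the screen
    }


rig = stereo_rig((0.0, 1.6, 0.0), (1.0, 0.0, 0.0))
print(rig["left"][0], rig["right"][0])
```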

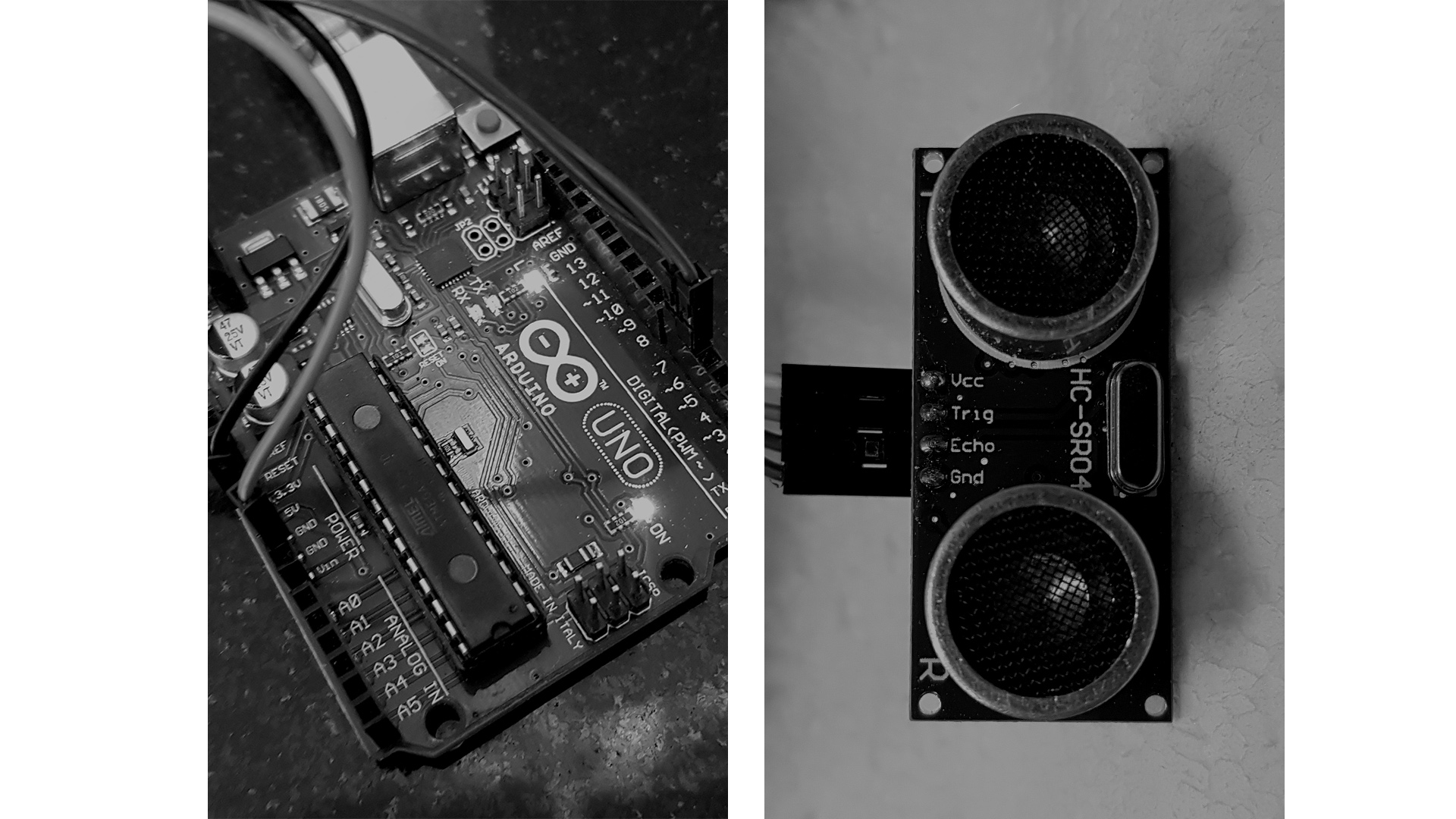

We also built our own controllers from simple sensors, such as an accelerometer, a gyroscope, and an ultrasonic sensor, and mapped the hand movements they recorded to different musical variables. The lesson from this exercise: because the sensors were independent of the AR camera, one could look anywhere while interacting with the controllers. This also enabled a more tangible mode of interaction, so we decided to take this approach further.
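Mapping a sensor to a musical variable mostly comes down to rescaling the sensor's useful range onto the parameter's range. The sketch below is a float, clamped variant of the idea behind Arduino's integer `map()` function (which does not clamp). The sensor ranges and the parameter pairings in `MAPPINGS` are hypothetical examples, not the values we used.

```python
from typing import Dict, Tuple


def map_range(value: float, in_min: float, in_max: float,
              out_min: float, out_max: float) -> float:
    """Linearly rescale value from [in_min, in_max] to [out_min, out_max], clamped."""
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    t = (value - in_min) / (in_max - in_min)
    t = min(max(t, 0.0), 1.0)  # readings outside the calibrated range are clamped
    return out_min + t * (out_max - out_min)


# Hypothetical sensor -> parameter table: (input range, output range).
MAPPINGS: Dict[str, Tuple[Tuple[float, float], Tuple[float, float]]] = {
    "ultrasonic_cm->pitch_midi": ((5.0, 45.0), (55.0, 79.0)),
    "gyro_roll_deg->sustain": ((-90.0, 90.0), (0.0, 1.0)),
    "accel_tilt_deg->volume": ((0.0, 90.0), (0.0, 1.0)),
}


def apply_mapping(name: str, reading: float) -> float:
    """Look up a mapping by name and rescale one sensor reading through it."""
    (in_lo, in_hi), (out_lo, out_hi) = MAPPINGS[name]
    return map_range(reading, in_lo, in_hi, out_lo, out_hi)


print(apply_mapping("accel_tilt_deg->volume", 45.0))  # 0.5
```

Clamping is a design choice: it keeps a stray reading from pushing a parameter outside its valid range, at the cost of hiding readings that fall outside the calibration.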

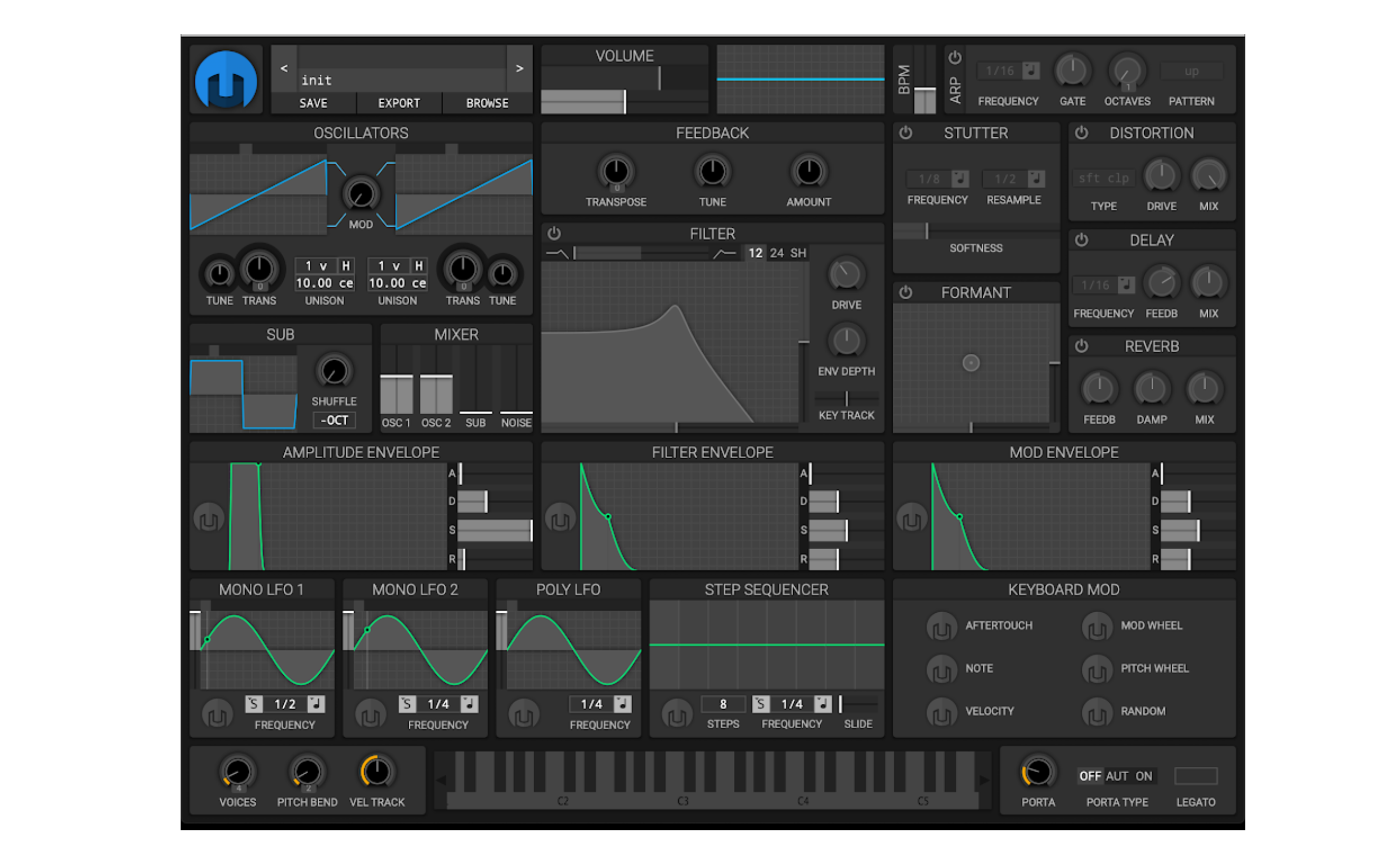

Another challenge was producing musical sounds in Unity from the input of the sensors, virtual buttons, or raycast. We used the Audio Helm plugin, a live audio synthesizer, sequencer, and sampler for Unity that provides tools for creating dynamic sound effects and generative music. We then modified the code to drive Audio Helm parameters from the sensor input, so that the sound responded to gestures.
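A continuous sensor stream has to be turned into discrete note events before a synthesizer can play it; otherwise the same note would be retriggered on every reading. The minimal sketch below emits a note-off for the previous note and a note-on for the new one only when the detected note changes. The `NoteTracker` class and the `("on", note)` / `("off", note)` event tuples are hypothetical stand-ins for whatever note-on and note-off calls the synth plugin exposes; this is not Audio Helm's actual API.

```python
from typing import List, Optional, Tuple

Event = Tuple[str, int]  # ("on" or "off", MIDI note number)


class NoteTracker:
    """Converts a stream of detected notes (None = silence) into note events."""

    def __init__(self) -> None:
        self.current: Optional[int] = None

    def update(self, note: Optional[int]) -> List[Event]:
        if note == self.current:
            return []  # same note, or still silent: nothing to send
        events: List[Event] = []
        if self.current is not None:
            events.append(("off", self.current))  # release the old note first
        if note is not None:
            events.append(("on", note))
        self.current = note
        return events


tracker = NoteTracker()
for detected in [67, 67, 69, None]:
    print(tracker.update(detected))
```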

Audio Helm VST

In parallel, we explored visualizations for the instrument that would be visible in the VR headset. One visualization used a large number of instances of a sphere with a glowing material, with the amount of glow, gravity, and scatter each linked to different frequencies. These visualizations would later help create an immersive experience for the player.
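One way to link visual parameters to frequencies is to split an audio spectrum into bands and drive each parameter from one band's average magnitude. The Python sketch below assumes a magnitude spectrum whose bins span 0 Hz to the Nyquist frequency, which is the layout Unity's `AudioSource.GetSpectrumData` fills. The band edges, the gain, and which band drives glow, gravity, or scatter are hypothetical choices for illustration.

```python
from typing import Dict, Sequence


def band_energy(spectrum: Sequence[float], sample_rate: int,
                lo_hz: float, hi_hz: float) -> float:
    """Average magnitude of the bins whose frequency falls in [lo_hz, hi_hz)."""
    bin_hz = (sample_rate / 2) / len(spectrum)  # bins cover 0 Hz .. Nyquist
    values = [m for i, m in enumerate(spectrum) if lo_hz <= i * bin_hz < hi_hz]
    return sum(values) / len(values) if values else 0.0


def visual_params(spectrum: Sequence[float], sample_rate: int = 48000,
                  gain: float = 1.0) -> Dict[str, float]:
    """Hypothetical pairing of frequency bands with the sphere swarm's parameters,
    each clamped to at most 1.0."""
    nyquist = sample_rate / 2
    bands = {
        "glow": (0.0, 250.0),          # low band
        "gravity": (250.0, 2000.0),    # mid band
        "scatter": (2000.0, nyquist),  # high band
    }
    return {
        name: min(1.0, gain * band_energy(spectrum, sample_rate, lo, hi))
        for name, (lo, hi) in bands.items()
    }


bass_only = [1.0] * 11 + [0.0] * 1013  # energy only in the lowest bins
print(visual_params(bass_only))
```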

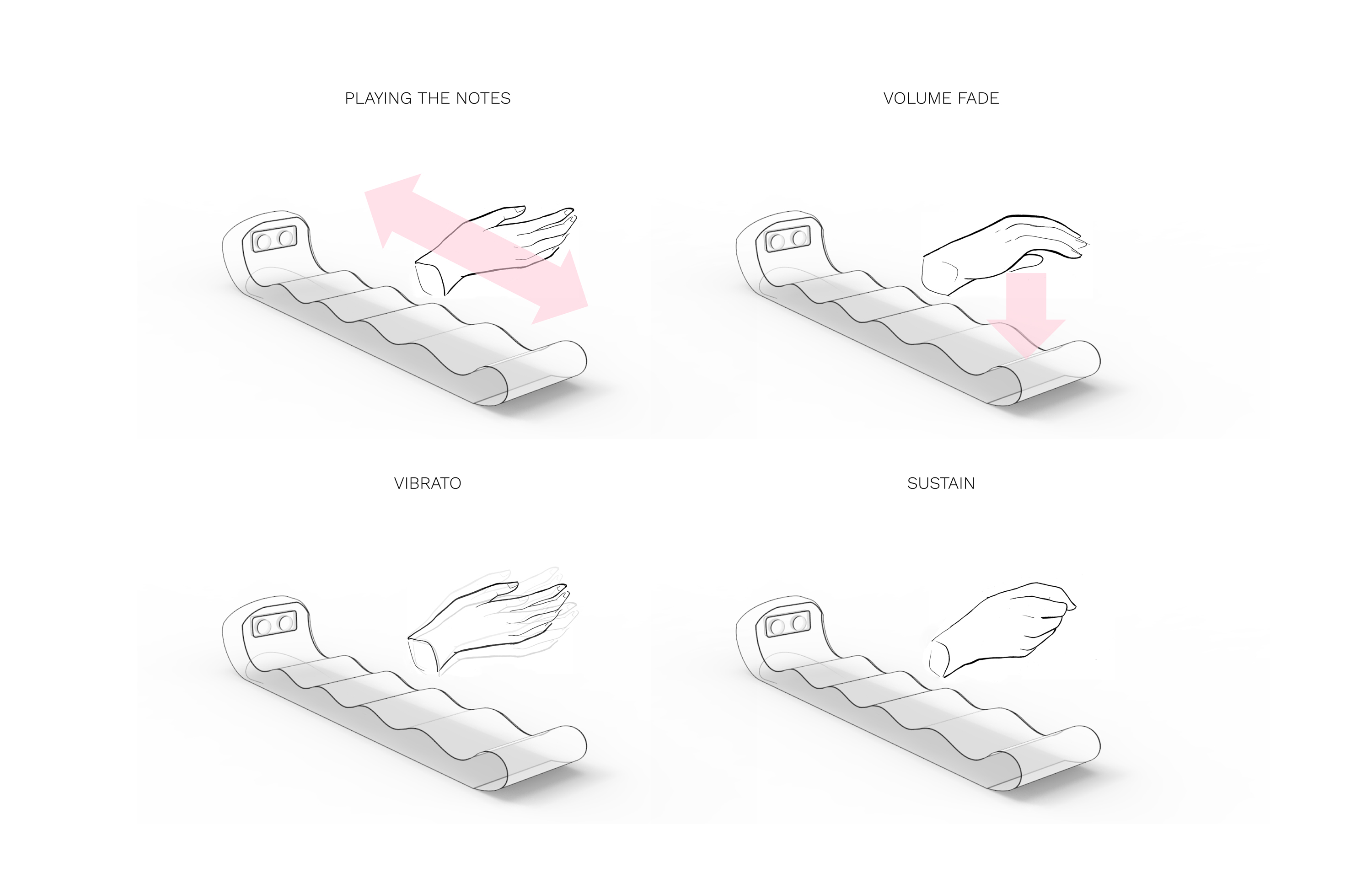

For a holistic, seamless experience, we had to think about usability and experience design as well as the technical aspects. To decide on gestures that would be intuitive and easy to perform, we ran role-play and act-it-out sessions, simply performing actions that pretended to control existing music. These Wizard of Oz prototypes helped us understand which hand movements feel enjoyable and natural for controlling musical variables such as pitch, volume, sustain, and vibrato. We also took inspiration from the Mr. Bean video.

How to Play: Using gestures to control parameters.

To connect the intended design with the technology, we had to work out how to implement each gesture. As most of the gestures were free-flowing hand movements, we decided on a wearable glove with sensors. A 6-DOF accelerometer and gyroscope sensor captured the rotational and translational motion of the hand. We added further input modes through buttons on the fingers: one could pinch the thumb and index finger together and move the hand as if holding and sliding a virtual slider to control any variable.
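The pinch-and-slide gesture works like a clutch. While the thumb and index finger are pinched, hand movement changes the parameter relative to where the pinch started, and releasing the pinch leaves the value where it is. The minimal sketch below assumes a one-dimensional hand position is available every frame (for example, derived from the glove's motion sensor); `PinchSlider`, `hand_x`, and the sensitivity value are hypothetical.

```python
from typing import Optional, Tuple


class PinchSlider:
    """Relative 'virtual slider' controlled by a pinch button and hand position."""

    def __init__(self, value: float = 0.5, sensitivity: float = 2.0) -> None:
        self.value = value              # parameter value, kept within [0, 1]
        self.sensitivity = sensitivity  # value change per unit of hand movement (assumed)
        self._anchor: Optional[Tuple[float, float]] = None  # (hand_x, value) at pinch start

    def update(self, pinched: bool, hand_x: float) -> float:
        if not pinched:
            self._anchor = None  # released: the value stays where it was left
            return self.value
        if self._anchor is None:
            self._anchor = (hand_x, self.value)  # pinch just started: grab the slider
        start_x, start_value = self._anchor
        moved = start_value + (hand_x - start_x) * self.sensitivity
        self.value = min(1.0, max(0.0, moved))
        return self.value


slider = PinchSlider()
for pinched, x in [(True, 0.1), (True, 0.2), (False, 1.0)]:
    print(slider.update(pinched, x))
```

Because movement is applied relative to the grab point, the player can release, reposition the hand, and pinch again to keep adjusting, much like lifting a mouse.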

Alternate Concept for a Wearable Instrument

One decision we had to make was whether to glide smoothly between semitones (as on a theremin) or to jump directly from one semitone to the next. After testing both options, we kept only discrete semitones, because the pitches between two semitones were difficult to play.
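In twelve-tone equal temperament, MIDI note n has frequency f = 440 * 2^((n - 69) / 12) Hz, so a theremin-style glide corresponds to a fractional n, while the stepped design rounds n to the nearest integer. The sketch below shows both modes from a normalized hand position; the `pitch_from_position` helper and its default note range are hypothetical.

```python
def midi_to_hz(note: float) -> float:
    """Equal-temperament frequency of a (possibly fractional) MIDI note; 69 = A4 = 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)


def pitch_from_position(position: float, low_note: int = 55, high_note: int = 79,
                        quantize: bool = True) -> float:
    """Frequency for a hand position in [0, 1] along the playing range.

    quantize=False glides continuously, like a theremin;
    quantize=True snaps to the nearest semitone.
    """
    position = min(max(position, 0.0), 1.0)
    note = low_note + position * (high_note - low_note)
    if quantize:
        note = round(note)  # Python rounds exact .5 ties to the even note
    return midi_to_hz(note)


print(midi_to_hz(69), midi_to_hz(81))  # 440.0 880.0
```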

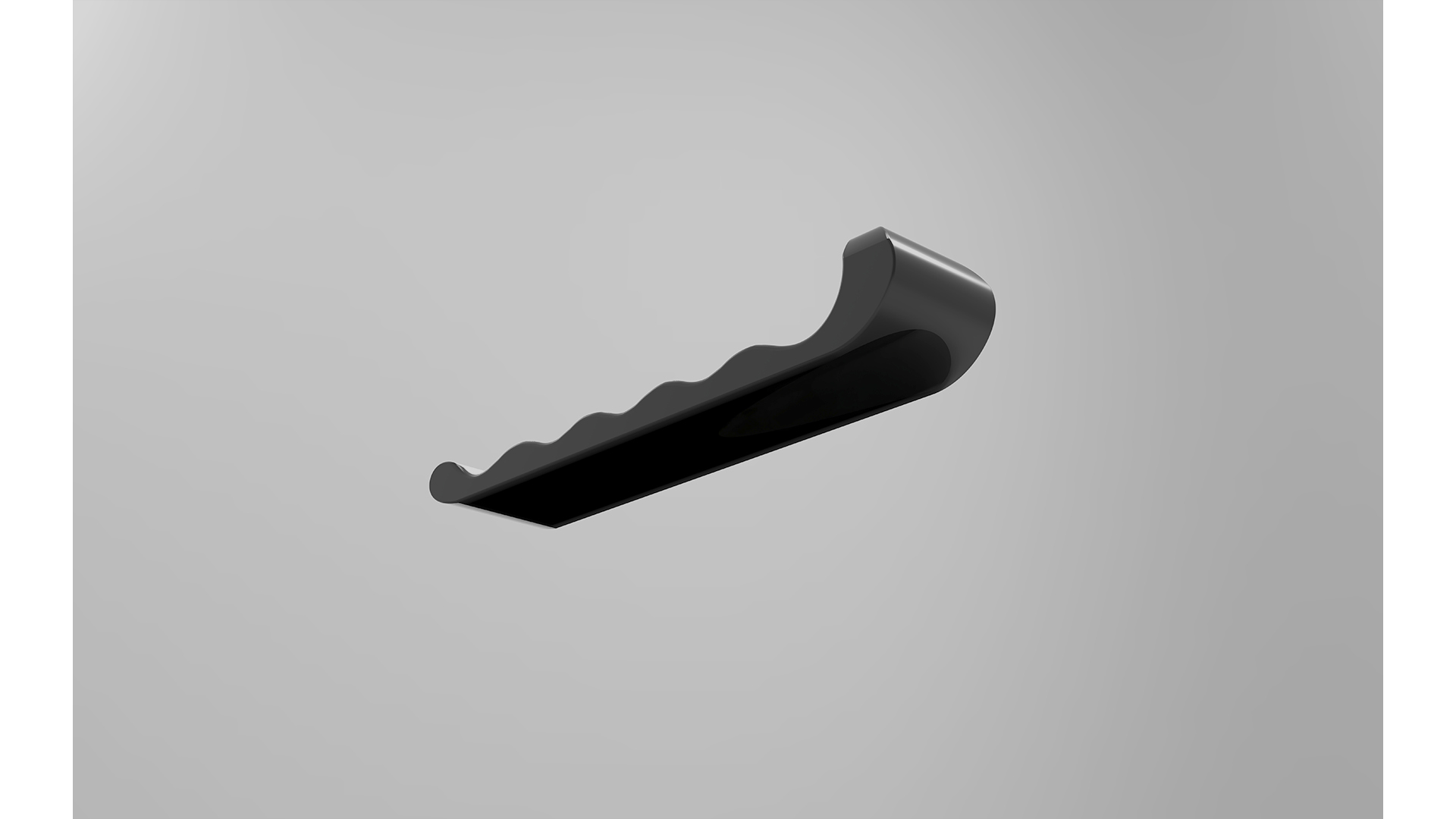

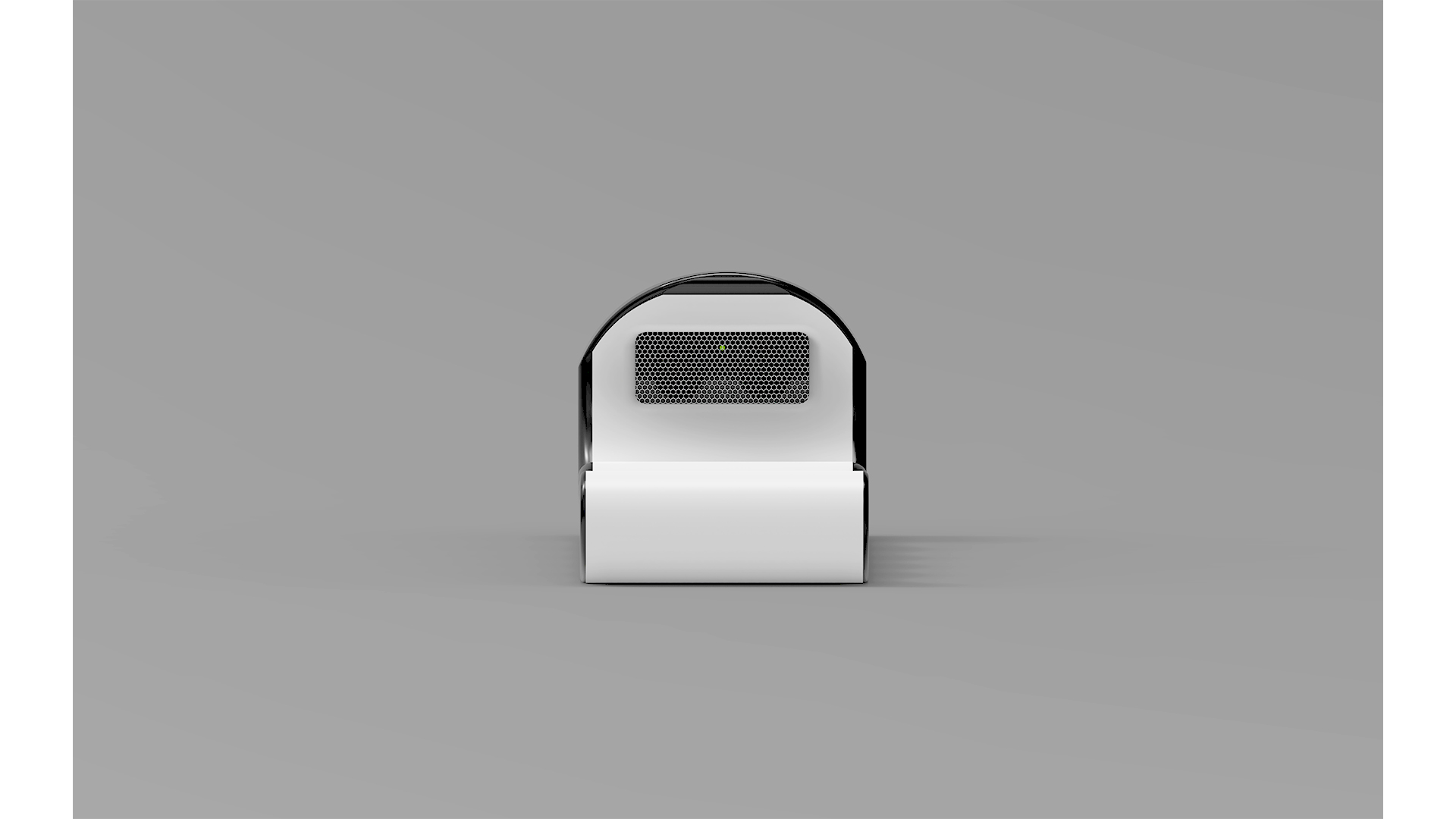

The feedback on our concept so far was to include something tangible to interact with. Physical intimacy with an instrument makes playing music more enjoyable, and it is also what players are used to. With this in mind, we designed a simple wave-like form that matches the kind of interaction we wanted. It is a light instrument that can be cradled in one arm like a child and played by waving the other hand over it. An ultrasonic sensor at one end measures the hand's distance, and its readings are calibrated so that each crest and trough of the form plays a different note of a scale. The prototype covers one octave of the G major scale.
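Calibrating the sensor to the crests and troughs amounts to dividing the measured distance into zones and assigning one scale degree to each. The minimal sketch below assumes the octave runs from G4 to G5 (MIDI 67 to 79) and uses made-up, evenly spaced zone edges. On the real form, the edges would come from measuring where each crest and trough sits, which is why they are kept as an explicit list rather than computed.

```python
import bisect
from typing import List, Optional

# One octave of G major: G A B C D E F# G (octave G4..G5 assumed).
G_MAJOR_MIDI: List[int] = [67, 69, 71, 72, 74, 76, 78, 79]
NOTE_NAMES: List[str] = ["G4", "A4", "B4", "C5", "D5", "E5", "F#5", "G5"]

# Hypothetical calibration: 9 edges (cm from the sensor) bound 8 zones,
# one per crest or trough of the form.
ZONE_EDGES_CM: List[float] = [5, 10, 15, 20, 25, 30, 35, 40, 45]


def zone_for_distance(distance_cm: float) -> Optional[int]:
    """Index of the zone containing distance_cm, or None if the hand is off the instrument."""
    if not ZONE_EDGES_CM[0] <= distance_cm < ZONE_EDGES_CM[-1]:
        return None
    return bisect.bisect_right(ZONE_EDGES_CM, distance_cm) - 1


def note_for_distance(distance_cm: float) -> Optional[int]:
    """MIDI note for a hand distance, or None outside the calibrated range."""
    zone = zone_for_distance(distance_cm)
    return None if zone is None else G_MAJOR_MIDI[zone]


print(note_for_distance(12), NOTE_NAMES[zone_for_distance(38)])  # 69 F#5
```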

Top, Bottom and Front View of the Instrument.

Final Concept with headset and headphones for the full experience.

The main component of the instrument was the ultrasonic sensor, which measured the distance of the hand from one end of the instrument. The readings were taken by an Arduino Uno and sent to Unity over serial port communication. In Unity, we used C# and Audio Helm to play a musical note based on the position of the hand. Another component was an accelerometer that recorded the angle of the hand to control the volume (flattening the hand over the instrument lowers the volume, and raising it upright increases it). The accelerometer also measured the vibration of the hand at a given position to add vibrato.

We tried to optimize the code by limiting the sensor to an optimum range and adding delays between transmissions, so that we would not overwhelm Unity with data and cause lag, while still sending enough data to follow the hand movements precisely. However, with all the sensors sending data there was still some lag, so for our MVP we used only the ultrasonic sensor to play different notes with hand movements.
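The range limiting and send delay described above can be sketched as follows. The logic is written in Python to keep the examples in one language; on the Arduino it would live in the sketch's `loop()`. It assumes an HC-SR04-style sensor that reports a round-trip echo time in microseconds, converted with a speed of sound of about 343 m/s. The 5 to 45 cm window, the 30 ms interval, the one-number-per-line message format, and the class and function names are all hypothetical.

```python
from typing import Optional

SPEED_OF_SOUND_CM_PER_US = 0.0343  # about 343 m/s in air at roughly 20 degrees C


def echo_to_cm(echo_us: float) -> float:
    """Convert a round-trip echo time to a one-way distance."""
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2


class ThrottledSender:
    """Sender side: drop readings outside the useful range and rate-limit the rest."""

    def __init__(self, min_cm: float = 5.0, max_cm: float = 45.0,
                 interval_ms: int = 30) -> None:
        self.min_cm = min_cm
        self.max_cm = max_cm
        self.interval_ms = interval_ms
        self._last_sent_ms: Optional[int] = None

    def process(self, now_ms: int, echo_us: float) -> Optional[str]:
        """Return the serial line to send for this reading, or None to skip it."""
        distance = echo_to_cm(echo_us)
        if not self.min_cm <= distance <= self.max_cm:
            return None  # hand is off the instrument, or a spurious echo
        if (self._last_sent_ms is not None
                and now_ms - self._last_sent_ms < self.interval_ms):
            return None  # too soon after the previous message
        self._last_sent_ms = now_ms
        return f"{distance:.1f}\n"  # one reading per line


def parse_distance(line: str) -> Optional[float]:
    """Receiver side: parse one line, ignoring partial or corrupted messages."""
    try:
        return float(line.strip())
    except ValueError:
        return None


sender = ThrottledSender()
print(repr(sender.process(0, 1166)), sender.process(10, 1166))  # '20.0\n' None
```

The interval trades latency for load: a shorter interval follows the hand more closely but sends more messages for the receiver to process each frame.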

Tools used.

We also needed visual feedback for a complete experience, which we built in Unity itself. A sphere in the VR world space moves with the hand, its position driven by the data from the ultrasonic sensor. Along the sphere's path we laid a series of bars; when the sphere collides with a bar, the bar glows or ripples, and Audio Helm plays the musical note that corresponds to it. We kept the working prototype simple, but we also created richer visualizations that could enhance the experience of playing the instrument.
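The sphere-and-bar feedback needs each bar to fire once when the sphere reaches it, not on every frame the two overlap. The minimal Python sketch below reduces the path to one dimension and reports only the bars the sphere has newly started touching, the same edge-triggered behavior Unity's `OnTriggerEnter` callback provides for colliders. The `Bar` and `BarTrack` names, the sphere radius, the bar spacing, and the notes are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Bar:
    center: float      # position along the sphere's path
    half_width: float
    note: int          # MIDI note to play when the sphere reaches this bar


class BarTrack:
    def __init__(self, bars: List[Bar], sphere_radius: float = 0.2) -> None:
        self.bars = bars
        self.sphere_radius = sphere_radius
        self._touching: Set[int] = set()  # bars overlapped on the previous frame

    def update(self, sphere_pos: float) -> List[int]:
        """Notes to play this frame: only bars the sphere has just entered.
        The same bars are the ones that should glow or ripple."""
        now = {
            i for i, bar in enumerate(self.bars)
            if abs(sphere_pos - bar.center) <= bar.half_width + self.sphere_radius
        }
        entered = sorted(now - self._touching)
        self._touching = now
        return [self.bars[i].note for i in entered]


track = BarTrack([Bar(1.0, 0.1, 67), Bar(2.0, 0.1, 69), Bar(3.0, 0.1, 71)])
for pos in [0.0, 0.8, 0.9, 2.0]:
    print(track.update(pos))
```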

Since the instrument lives in VR, we also propose a collaborative virtual environment where people from different places can come together to jam. “Near, far, wherever you are, I believe in our VAVE, the show must go on and on…”